Source: amsfrank on Flickr.com.

Web application development has become a really professional industry over the past decades, where professional teams are ensuring web applications get updated in an automated, tested and secured fashion. Concepts like “Continuous Integration”, “Continuous Deployment” and “Automated Testing” are now mainstream in any professional organisation: code gets integrated in a release branch and after that migration commit a whole bunch of automation processes are kicking into gear to test, analyse, migrate and deploy changed features onto production systems.

Let’s look at the automated processes of migrating database changes into production using DBDeploy.

DBDeploy

In comparison to other database migration tools, DBDeploy is not a tool “pur sang”, but more a procedure on how to do things in an automated fashion.

In order to use DBDeploy efficiently, understanding the logic is essential.

In order to track changes, DBDeploy uses a single changelog table in your database to store and updated changes made to your database architecture. The fields for this changelog table are the following:

- Change number: The sequence number of the database change file

- Delta set: A logical grouping of database changes

- Start timestamp: Timestamp of when the changes were started

- Complete timestamp: Timestamp of when the changes were completed

- Applied by: A user identifier to log who made the change

- Description: A small description about what the change is about

DBDeploy uses a delta file to track changes in the database by using a numbered SQL file per delta set (project or feature) that contains the change to the current database structure.

Deltasets

The moment you work on a feature, project or bugfix and you need to make database changes, you can now use small numerated SQL scripts

Let’s assume you have a project called “new api order module” and it uses the database “erp”. When you need to make changes to the active database structure you should create a new directory (called “Delta Set) with the name of the project “erp_new_api_order_module” (using database + project name for clarity) within deploy/deltas directory. So this would be your directory structure:

/path/to/project

/deploy

/deltas

/erp_new_api_order_module

Delta scripts

Delta scripts are SQL snippets to make changes on the current database structure in production. Best results are made when each delta script contains only one change to the schema design, so when something fails the impact is kept to a minimum.

A delta script should have 2 main sections: a section to make the change and a section to undo the change you have made. Best is that you snake_case the filename with a proper description what’s being done.

Example: 0002_add_constraints_for_contacts.sql

-- //0002 Adding constraints for contacts

ALTER TABLE `contact_details`

ADD FOREIGN KEY `contact_id_fk` (`contact_id`)

REFERENCES `contact` (`contact_id`)

ON UPDATE CASCADE

ON DELETE RESTRICT;

ALTER TABLE `contact`

ADD UNIQUE KEY `contact_email_uk` (`contact_email`);

-- //@UNDO

ALTER TABLE `contact`

DROP INDEX `contact_email_uk`;

ALTER TABLE `contact_details`

DROP FOREIGN KEY `contact_id_fk`;

Schema change section

In the first part of the SQL file you describe the functionality as a SQL comment

-- //0002 Adding constrains for contacts

This comment is ignored by MySQL but dbdeploy will use it to provide a description of the change that was executed. So don’t ignore it as it allows us to figure out what has changed!

ALTER TABLE `contact_details`

ADD FOREIGN KEY `contact_id_fk` (`contact_id`)

REFERENCES `contact` (`contact_id`)

ON UPDATE CASCADE

ON DELETE RESTRICT;

ALTER TABLE `contact`

ADD UNIQUE KEY `contact_email_uk` (`contact_email`);

This is the actual change to be made against the existing database structure that will be executed. Preferrably you test this manually against your local database to ensure you haven’t made any mistakes.

Schema change undo section

In the second section of the SQL file wee keep track of our changes and provide an “undo” routine to reverse our changes that we made in this file.

-- //@UNDO

This statement indicatest DBDeploy that this part of the file is considered an undo routine. Note the //@UNDO string: two forward slashes, an at-sign and in capitals the word “UNDO”. This is the convention used by DBDeploy, so really important to follow this requirement.

ALTER TABLE `contact`

DROP INDEX `contact_email_uk`;

ALTER TABLE `contact_details`

DROP FOREIGN KEY `contact_id_fk`;

What follows is a routine to undo the statements you’ve created in the first section. Most of the time this section is used to drop tables, fields or indexes that were created.

NOTE: It’s hard to revert inserts of data in the database, please take care reverting them!

Single row updates can be fairly easy reverted as you always have a reference to what primary key you were making the change, but when you’re updating a bunch of rows, it becomes harder to figure out what their original value was, so best check with production before you’re updating your databse.

Phing

Phing is a build tool comparable to Apache Ant, where you can provide configuration settings, custom targets and an impressive list of build-in tasks. If you haven’t used it before and you’re keen on automating some of your work, you should definitely check it out.

In our case, we use phing to execute our dbdeploy process. By default, phing looks for a build.xml file in the same path phing is called.

<?xml version="1.0" encoding="UTF-8"?>

<project name="DB Deploy for WebsiteNG and Legacy Cron" default="build">

<tstamp/>

<fileset id="deltadirs" dir="${project.basedir}/deltas">

<patternset>

<include name="**/*"/>

<exclude name="**/*.sql"/>

</patternset>

</fileset>

<target name="prepare" description="Fetch configuration settings">

<property file="${project.basedir}/build.properties"/>

<property file="${project.basedir}/local.properties" override="true"/>

</target>

<target name="deploy" description="Deploying deltasets to database" depends="prepare">

<foreach param="delta" absparam="absfilename" target="dbdeploy">

<fileset refid="deltadirs"/>

</foreach>

</target>

<target name="dbdeploy" description="Executing database delta changes" depends="prepare">

<property name="delta" value="test1"/>

<property name="dbdeploy.output-path" value="${project.basedir}/scripts/${delta}"/>

<property name="dbdeploy.mkdir" value="false"/>

<available file="${dbdeploy.output-path}" type="dir" property="dbdeploy.mkdir" />

<echo message="path is available? ${dbdeploy.mkdir}"/>

<if>

<isfalse value="${dbdeploy.mkdir}"/>

<then>

<mkdir dir="${dbdeploy.output-path}"/>

</then>

</if>

<dbdeploy

url="${db.dsn}"

userid="${db.username}"

password="${db.password}"

dir="${project.basedir}/deltas/${delta}"

outputfile="${project.basedir}/scripts/${delta}/deploy-${DSTAMP}-${TSTAMP}.sql"

undooutputfile="${project.basedir}/scripts/${delta}/undo-${DSTAMP}-${TSTAMP}.sql"

deltaset="${delta}"

appliedBy="dbdeploy"/>

<trycatch property="dbdeploy.failure">

<try>

<pdosqlexec

url="${db.dsn}"

userid="${db.username}"

password="${db.password}"

src="${project.basedir}/scripts/${delta}/deploy-${DSTAMP}-${TSTAMP}.sql"

onerror="abort"

encoding="utf8"/>

</try>

<catch>

<echo message="Failed to deploy: ${dbdeploy.failure}"/>

<phingcall target="rollback" />

</catch>

</trycatch>

</target>

<target name="rollback" description="Reverses any commits that were made" depends="prepare">

<trycatch property="rollback.failure">

<try>

<pdosqlexec

url="${db.dsn}"

userid="${db.username}"

password="${db.password}"

src="${project.basedir}/scripts/${delta}/undo-${DSTAMP}-${TSTAMP}.sql"

onerror="continue"

encoding="utf8"/>

</try>

<catch>

<echo message="Failed to rollback: ${dbdeploy.failure}"/>

<phingcall target="rollback" />

</catch>

</trycatch>

<echo message="Rolling back with ${delta}"/>

</target>

<target name="check" description="Check the changelog for delta updates" depends="prepare">

<echo message="Displaying latest deltasets on database"/>

<pdosqlexec

url="${db.dsn}"

userid="${db.username}"

password="${db.password}"

onerror="continue"

encoding="utf8">

<formatter type="plain" usefile="false" showheaders="false" coldelim=": "/>

<transaction>

SELECT `delta_set`, MAX(`change_number`) AS `last_delta` FROM changelog GROUP BY `delta_set`;

</transaction>

</pdosqlexec>

</target>

<target name="build" description="Starting automated deployments" depends="prepare, deploy">

</target>

</project>

The default target is “build” which depends on targets “prepare” and “deploy”. The “prepare” target will load the global configuration settings build.properties file and if present also the local configuration settings local.properties file.

These configuration files just contain key/value pairs that we use within our build.xml phing script to load global and local settings. In the case for DBDeploy it only contains database settings.

Example: build.properties

db.dsn = mysql:host=127.0.0.1;port=3306;dbname=test;charset=utf8

db.username = dbdeploy

db.password = dbdeploy

These settings are then used in our “deploy” target to execute our database changes. If for some reason something goes wrong, there’s a target called “rollback” that will execute the undo statements and ensures the changelog table is not updated.

Running phing on commandline

If you don’t have installed phing globally, you can download the latest phing.phar from https://www.phing.info/get/phing-latest.phar and put it in the location of your build.xml.

wget -O phing.phar https://www.phing.info/get/phing-latest.phar

To run DBDeploy, all you need to do is running phing.

php phing.phar

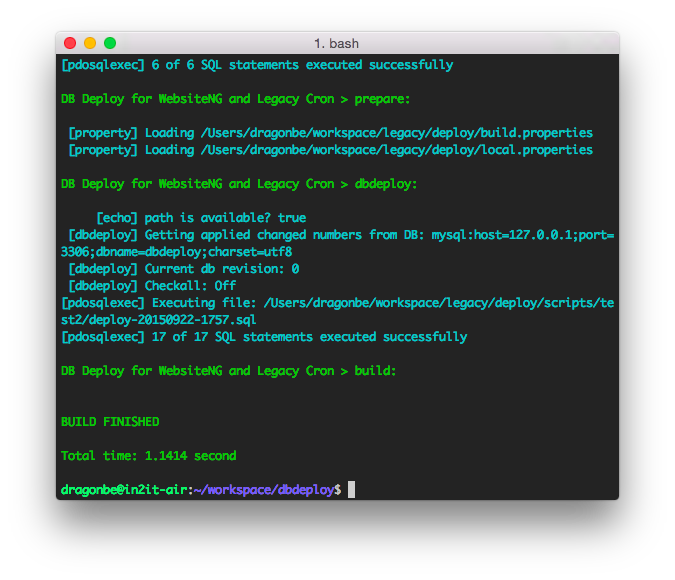

This will produce something similar like the following:

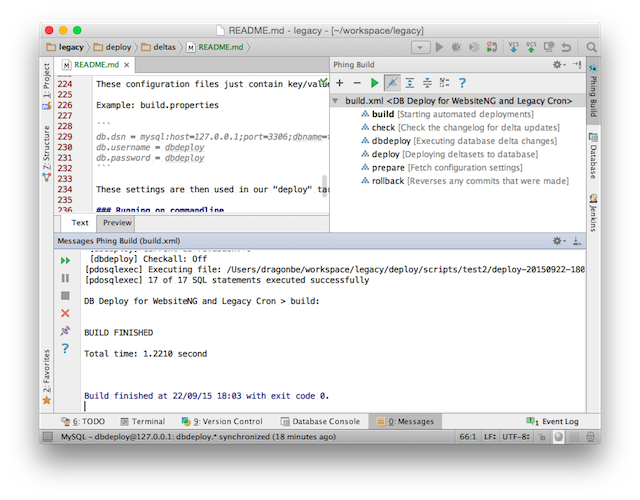

Executing phing within PHPStorm

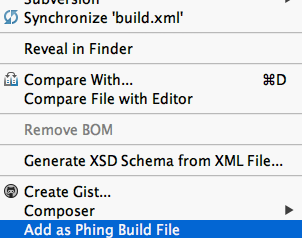

PHPStorm has a neat feature where you can set your build.xml as Phing Build file. In your navigator pane, right-click on your build.xml file to get the context menu popup. You’ll see it as last menu item.

Once added, you now get a nice popup for phing. All you need to do is hit the green arrow!

Adding dbdeploy into your CI build pipeline

If you’re a fan of “Continuous Integration” and “Continuous Delivery”, then you’re absolutely ready to automate the migration of your databases. Yes, automatically update your live databases! I know it sounds scary, but less scary considering all the things that can happen when you deploy manually.

In your CI system of preference create a seperate project “DBDeploy” as we want to set it up as part of a building pipeline. This means: we don’t execute “DBDeploy” if our previous assigned projects failed and we only continue if they succeeded.

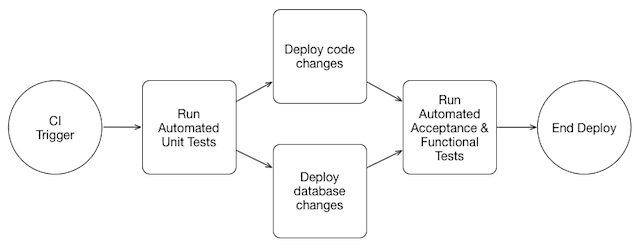

Below is a really simplified overview of a CI build pipeline, where the automated deployment of database changes can occur at the same time as the “release” of code changes onto the server.

NOTE: This is a simplified example that is never useful in production environments!

Additional advice

The concept of DBDeploy in this scenario is for migrating changes to a mysql database, but you can also apply this on other database engines, even NoSQL data storages.

A good approach to figure out how to best apply this automation technique in your situation, review the steps you take now to manually migrate your database(s) and list them up as a checklist. It might look like the following example:

With phing you can automate these steps and have a repetitive deployment procedure that will allways execute the same tasks every time it is run, even in stressful moments where emergency deployments are required.